AI in Film Production: How to Produce an Innovative Low-Budget Film

In today’s article, I want to introduce a major project we are working on with nExt, as usual, combining artificial intelligence and cinema. We are using AI to produce an immersive 360-degree film that will initially be projected in mobile domes. It draws on the latest technologies to create a memorable experience that blends the real and the virtual. In short: a milestone for the cinema of the future.

It promises to leave a deep mark and to be a significant turning point in the history of cinema: a more democratic cinema, closer to people, one that brings them together. That is what cinema originally was, and what it has long failed to be.

In this article I want to discuss the basic idea in broad technical terms, and share some possible ways of using artificial intelligence to get the most out of an intentionally small budget.

You can also consider it an update, at a much more advanced stage, of my previous article from February 2023, “How to Make Low Budget Movies with Artificial Intelligence – First Steps”.

The revolutionary impact of Artificial Intelligence in cinema

At one time, special effects and narrative techniques were the magic wands of cinema, but today artificial intelligence is playing a revolutionary card. It is an incredible help, an accomplice that opens the door to unprecedented innovation. We are riding this wave, with AI by our side lending a hand in creating compelling stories, digital characters that feel real, and music that gets right to your heart. But let us not forget the human touch, the real beating heart of each of our creations.

“Artificial intelligence and cinema” is not a token motto, but the beginning of a new chapter in storytelling. We are ready to prove that the future of entertainment is no longer a distant dream: it is here, and it is animated by artificial intelligence.

Luna Dolph, Kyle and China: from virtual to real life

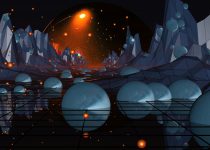

The story, scripted by Gérard Bernasconi, follows Luna Dolph, Kyle and China: not mere characters, but three-dimensional avatars that breathe life into a fascinating and complex virtual world. These avatars are not only the protagonists of a story but also symbols of our age, vivid representations of our progressive immersion in the virtual universe, also known as the metaverse. This concept, once relegated to science fiction, is rapidly taking shape and influencing diverse industries, from video games to social networking platforms, transforming the way we interact, work and have fun.

Virtual Reality and Digital Identities

Our goal is not only to tell a compelling story, but to invite the audience to reflect on the nature of reality in a digital age. These avatars, with their complex interactions and intricate worlds, represent the ongoing fusion of our physical and digital lives. A convergence that is redefining the very meaning of identity, community and belonging.

At the core of our narrative we find Luna Dolph, Kyle and China. They are three three-dimensional avatars whose lives take place in a virtual world of extraordinary beauty. While existing in the digital ether, their story is a bridge to reality, a means of reminding our viewers of the irreplaceable value of human interaction and real life. In an age when digitization has transformed our ways of connecting, our narrative aims to use technology not as a refuge, but as a springboard to rediscover and reinvigorate authentic and tangible sociality.

Technology helps you live better

As Luna, Kyle and China navigate a fascinating metaverse, their experiences and challenges carry a clear message: technology, however advanced, is a tool that, when used wisely, can enrich but not replace the warmth and complexity of human connections. Our goal is to bring home the realization that, despite the appeal of the digital, real life takes place off the screen, in the shared laughter, handshakes, hugs and spontaneous moments that make up the fabric of our existence.

With this story, we hope to inspire viewers to lift their gaze from their devices and re-immerse themselves in the real world, enriching their lives with authentic experiences. Through the exploration of virtual worlds, we want to celebrate and promote the beauty and irreplaceable importance of real life and human sociality.

The film will represent the first outing in the real world for the three main characters.

How we use AI in film production

We are still in the pre-production stage, so from a practical point of view I will keep you updated in the coming months. For now, we have a rough idea: we have selected a compendium of the latest artificial intelligence (AI) technologies that are both affordable and available to all.

The film will be in fulldome format, an immersive 360×180 degrees, and we will project it in domes and planetariums. This is a crucial innovation looking to the future, as we push more and more toward total immersion. Our budget is limited: roughly between 10,000 and 20,000 euros. The film will last about 40 minutes: about 30% will take place in the virtual world of Luna and her friends (entirely recreated in Unreal Engine), and the remaining 70% in the real world.
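To make the split concrete, here is a minimal sketch of the arithmetic behind those percentages. The function name `split_runtime` is purely illustrative, and the figures are the approximate ones quoted above, not a final production plan.

```python
def split_runtime(total_minutes: float, virtual_share: float) -> tuple[float, float]:
    """Return (virtual_minutes, real_minutes) for a share between 0 and 1."""
    if not 0.0 <= virtual_share <= 1.0:
        raise ValueError("virtual_share must be between 0 and 1")
    virtual = total_minutes * virtual_share
    return virtual, total_minutes - virtual


# Approximate figures from the article: 40 minutes, 30% in the virtual world.
virtual_min, real_min = split_runtime(40, 0.30)
print(f"Virtual world: {virtual_min:.0f} min, real world: {real_min:.0f} min")
```

In other words, roughly a dozen minutes have to be built in Unreal Engine, while the rest is live action.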

Using Luma AI for the cinema of the future

The first tool in this technological arsenal is Luma AI, a pioneering solution that revolutionizes the generation of three-dimensional environments. Luma AI allows us to reproduce real environments within Unreal Engine in photorealistic quality, even using just an iPhone. By employing advanced technologies such as Neural Radiance Fields (NeRF) and the brand new Gaussian Splatting (the latter published just three months ago by the University of the Côte d’Azur, France), we can capture the complexity and richness of the real world in digital format, bring it into Unreal Engine (including the ability to move freely within the scene) and bring to life scenes previously reserved for large budgets.

We lower the costs associated with creating detailed settings and complex objects, as well as streamline and speed up the production process. Luma AI not only democratizes access to world-class technologies, but also gives us the tools to experiment at a speed that was previously unimaginable. And it allows us to impart an unprecedented level of vividness and depth to our scenes.

Skybox AI for cinema – Simplified lighting on Unreal Engine

Another crucial tool in our repertoire is Skybox AI by Blockade Labs, which creates immersive skyboxes that enrich virtual scenes with vital details about lighting and setting.

A skybox in Unreal Engine not only provides visually convincing surroundings (mountains, sky, distant houses, etc…), but also affects the overall lighting of 3D assets within the scene. This is what interests us most in filmmaking: it acts as an ambient light source, reflecting its colors and hues on objects, helping to create a consistent and realistic atmosphere. For example, a skybox depicting a sunset will infuse warm orange and red hues on the scene; while a night skybox will provide a cooler, dimmer light. This process helps integrate 3D assets into the surrounding environment, making the entire visual experience more immersive and coherent.
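To illustrate why a sunset skybox tints a scene warm and a night skybox tints it cool, here is a minimal sketch that estimates a single "ambient color" from an equirectangular panorama. This is not how Unreal Engine computes sky lighting internally; it is only an illustration of the idea, and the function name `ambient_color` and the tiny test images are hypothetical. Rows are weighted by the cosine of their latitude, because rows near the poles cover less of the sphere.

```python
import math


def ambient_color(panorama):
    """Solid-angle-weighted mean RGB of an equirectangular panorama.

    `panorama` is a list of rows (top to bottom), each a list of (r, g, b)
    tuples with components in the range 0..1.
    """
    height = len(panorama)
    total = [0.0, 0.0, 0.0]
    weight_sum = 0.0
    for i, row in enumerate(panorama):
        # Latitude of the row centre: +pi/2 at the top, -pi/2 at the bottom.
        latitude = (0.5 - (i + 0.5) / height) * math.pi
        # Rows near the poles cover less of the sphere, so they weigh less.
        weight = math.cos(latitude)
        for r, g, b in row:
            total[0] += weight * r
            total[1] += weight * g
            total[2] += weight * b
            weight_sum += weight
    return tuple(c / weight_sum for c in total)


# Tiny hypothetical skyboxes, 4 rows x 8 columns of a single color each.
sunset = [[(1.0, 0.5, 0.2)] * 8 for _ in range(4)]
night = [[(0.05, 0.08, 0.2)] * 8 for _ in range(4)]
print("sunset ambient:", ambient_color(sunset))
print("night ambient:", ambient_color(night))
```

The warm panorama yields an ambient color dominated by red, the night one by blue, which is the effect described above in miniature.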

This aspect proves essential when creating 360-degree fulldome environments, where every tiny detail is critical to sustaining the illusion of a fully immersive world.

Using Kaiber AI for our film

Kaiber AI is a useful, high-quality tool, but I have a serious doubt: I don’t know whether it works with fulldome video. I haven’t had time to experiment with it yet, but it may help smooth out the scenes so that the assets blend together even better. It applies AI directly to the final video files.

Artificial intelligence and cinema: Reverie AI

While we wait for the launch of Reverie AI, we are intrigued by its promise to create virtual worlds for Unreal Engine almost by “copying” a pre-existing picture. Its ability to generate scenery that faithfully mimics reality, combined with its potential for color correction of virtual scenes, opens the door to countless possibilities in visual storytelling. Reverie AI promises to be an excellent complement to our workflow, improving visual consistency and ensuring smooth and convincing transitions between the virtual and real worlds.

Move.ai, cheap and working mocap

Another “smart” tool we plan to use for our film is Move.ai, specifically the inexpensive Move One service (on launch offer at $15 a month, then expected to cost $30). With a simple iPhone app, we will be able to create simple, ready-made custom animations without too many fixes or clean-ups. This reduces time and cost, and means we are not limited to the animations already available in services such as Mixamo, ActorCore or the MoCap Market by Noitom.

Here is a very quick video test:

Of course, they also offer a more expensive professional service that allows up to 8 cameras to be used. But we do not plan to use it for this first production unless there is a very real need.

Speech-to-speech for film dubbing… Is it possible?

In our production, innovation does not stop with the creation of the digital world; in fact, we also want to take advantage of the ongoing technological revolution in traditional aspects of filmmaking, such as dubbing.

The voice of Luna and the other 3D avatars must be consistent (not to say identical) across all media, in both the virtual and real worlds. We face a complex challenge: maintaining the same voice across multiple platforms, including social media and especially real-time streaming, without tying ourselves to a single voice actor. This gives us flexibility in storytelling and lets us adapt to various formats without depending on the availability of a specific actor.

The idea is to replicate the voices of some real actors and associate them with the avatars (Luna, Kyle, and China for starters). We can then transform any actor’s voice, in real time, into Luna’s, and use it for dubbing films and social content (in the original language as well as in translations) and for live streaming, where the converted voice is overlaid on the avatar animated through motion capture.

From the excellent but expensive Respeecher, to the ambiguous Voicemod

We explored options such as Respeecher, an advanced speech-to-speech conversion tool, but the costs for real-time use are prohibitive: we’re talking about 1,000 or 2,000 a month for a few hours of use. Voicemod presents itself as a cheaper solution thanks to its AI voices, although there are conflicting rumors about its reliability (some even consider it to be malware or cryptojacking…). There also remains the problem of not having the rights to the voice, which they own, and that will certainly prove to be a problem in the future. I do not yet know the cost of real-time conversion with Resemble.ai, which I have used in the past for the much cheaper text-to-speech, or with Veritone Voice.

Another tool that I have not been able to test is Voidol 3, which costs about $300. I couldn’t find a demo version, but I admit I didn’t try that hard to request one. It is one of several Japanese programs born from the typical Japanese passion for the anime world, yet it is adaptable to our purpose, as we will see in a moment with another Japanese tool.

MetaVoice Live, Mangio RVC Fork and W-Okada Voice Changer, free and open-source

The final solutions, after much research, are MetaVoice Live and the Voice Changer from W-Okada. Both are open source, which reassures us that we can base the “future life” of the characters on them, and both are free. I cannot fail to mention the YouTube channel AI Tools Search, which has been most useful to me. Among the most interesting videos in this area is certainly this one:

I particularly like MetaVoice: it is under active development and has a cloud version for non-real-time conversions that provides greater quality and flexibility. This one is not free, but the cost, between $10 and $25 per month, is manageable all in all.

In contrast, W-Okada’s Voice Changer has many independent developers building compatible solutions, such as GitHub user Mangio621, who created the Mangio RVC Fork, a program with a web interface that can transform the voice of a real actor into that of the chosen voice model. Many of these models, especially those of famous people, are already available on sites such as Voice-Models.com in “.pth” format (a classic format used in machine learning). The best thing, though, is that with the same Mangio RVC we can train a custom voice, entirely locally, given a good video card. That makes it always available, and free.

I will write a dedicated article on this shortly, so you can follow along as I run some interesting tests.

Generating video with artificial intelligence

One use of AI in film production may be text-to-video or video-to-video. But how useful can generating videos with artificial intelligence be? I mean generating them from scratch, describing to the AI in text (or with a very simplified video reference) what you want to achieve. This is probably the future, but to date tools like Runway Gen-1, Genmo AI or Moonvalley AI are little more than experiments: useful at some junctures, but far from the quality and realism needed for a film production.

Evidently, we will still have to do the work ourselves for a few more years to make our films 🙂

Canon EOS R5C and Dual Fisheye lens for 3D fulldome video

What about live action filming? In our journey, we are trying our hand at a very exciting combination of equipment, always keeping the goal in mind: little expense, much return. I had originally planned to shoot everything in full 360 degrees, also to make it compatible with a possible future virtual reality port. But the cost became prohibitive, both because of the camera (a professional camera like the Insta360 Titan, which by the way has not been updated for years, costs more than 17,000 euros) and because of the difficulty of starting out with such a wide field of view.

So the idea is to produce just the video needed for the dome, in 360×180 degrees (basically half the sphere). The Canon RF 5.2mm F2.8 L Dual Fisheye lens, paired with the Canon EOS R5C, should prove to be a winning choice. This setup not only provides immersive images, but also allows us to experiment with stereoscopic shots, which add an extra level of depth and realism.
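To give an idea of what "half the sphere" means on a flat frame, here is a minimal sketch of how a viewing direction could be mapped onto a circular dome-master image. It assumes an ideal equidistant fisheye projection (distance from the center grows linearly with the angle from the zenith), which is a common convention for fulldome frames but not a calibration of the Canon lens. The function name `dome_master_xy` and the azimuth convention (0 degrees pointing "up" in the image) are assumptions made for illustration.

```python
import math


def dome_master_xy(azimuth_deg, elevation_deg, fov_deg=180.0):
    """Map a viewing direction to normalised dome-master coordinates.

    The zenith maps to (0, 0) and the rim of the fisheye circle has radius 1.
    Returns None when the direction falls outside the field of view.
    """
    zenith_angle = 90.0 - elevation_deg   # angle away from the top of the dome
    r = zenith_angle / (fov_deg / 2.0)    # equidistant: radius is linear in angle
    if r > 1.0:
        return None
    az = math.radians(azimuth_deg)
    return (r * math.sin(az), r * math.cos(az))


print(dome_master_xy(0, 90))    # straight up: centre of the frame
print(dome_master_xy(90, 0))    # on the horizon: rim of the circle
print(dome_master_xy(0, -10))   # below the horizon: outside a 180-degree dome
```

Anything below the horizon simply has no place in the frame, which is why shooting for the dome only requires covering the upper hemisphere.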

The Canon EOS R5C camera, with its dynamic range of between 11 and 12 stops and its 8K sensor, offers excellent value for money. This is an important consideration for us, as we are trying to maintain a balanced budget without sacrificing quality. We are also considering renting it: Adcom offers the lens and camera for about 200 euros a day.

To be honest, we can’t fully exploit the potential of stereoscopy yet because of projection, but it is definitely something we would like to explore in the future. It is one of those things that looks really cool and could add a special touch to our project. Have you ever projected stereoscopic fulldomes or do you have any suggestions on how we could integrate it into our work? I would be happy to hear your thoughts and ideas.

And if it falls short of more prestigious cameras in any respect, we again count on leveraging AI to improve video quality. But research in this field still needs to be done.

Conclusions

In the end, our choice of digital tools and equipment reflects a desire not to compromise on quality while keeping an eye on the budget.

In short, we are creating something beyond traditional cinema. Thanks to artificial intelligence and cutting-edge technology, the “cinema of the future” is no longer a dream. It is real, and we want to see it happen. With the genius of Gérard Bernasconi on the screenplay (he also made a great technical contribution), the precision of Michela Sette as VFX Supervisor and the creativity of Michele Pelosio as director, we are forging a revolutionary cinematic experience. Get ready, because we are about to take you to a world where cinema and reality merge into a transcendental experience 🙂